Now we can get to the fun part: web scraping with axios. This is part three of the Learn to Web Scrape series. It builds on the previous two posts, which used Cheeriojs to parse HTML and saved the data to CSV.

- It allows you to do this from the command line, using JavaScript and jQuery. It does this by using PhantomJS, a headless WebKit browser: it has no window and exists only for your script's use, so you can load complex websites that use AJAX and they will work just as they would in a real browser.

If you are going to use JavaScript for scraping, I would suggest using your Node backend to do this (assuming you are using Node). Create a route that your React app can call and let your backend code do the work. Take a look at this tutorial; it's a couple of years old but should point you in the right direction. Cheerio is a Node.js library that helps developers interpret and analyze web pages using a jQuery-like syntax. In this post, I will explain how to use Cheerio in your tech stack to scrape the web. We will use the headless CMS API documentation for ButterCMS as an example and use Cheerio to extract all the API endpoint URLs from the web page.

The tools and getting started

This section I will include in every post of this series. It’s going to go over the tools that you will need to have installed. I’m going to try and keep it to a minimum so you don’t have to add a bunch of things.

Nodejs – This runs javascript. It’s very well supported and generally installs in about a minute. You’ll want to download the LTS version, which is 12.13.0 at this time. I would recommend just hitting next through everything. You shouldn’t need to check any boxes. You don’t need to do anything further with this at this time.

Visual Studio Code – This is just a text editor. 100% free, developed by Microsoft. It should install very easily and does not come with any bloatware.

You will also need the demo code referenced at the top and bottom of this article. You will want to hit the “Clone or download” button and download the zip file and unzip it to a preferred location.

Once you have it downloaded, unzipped, and with Nodejs installed, you need to open Visual Studio Code and then go File > Open Folder and select the folder where you downloaded the code.

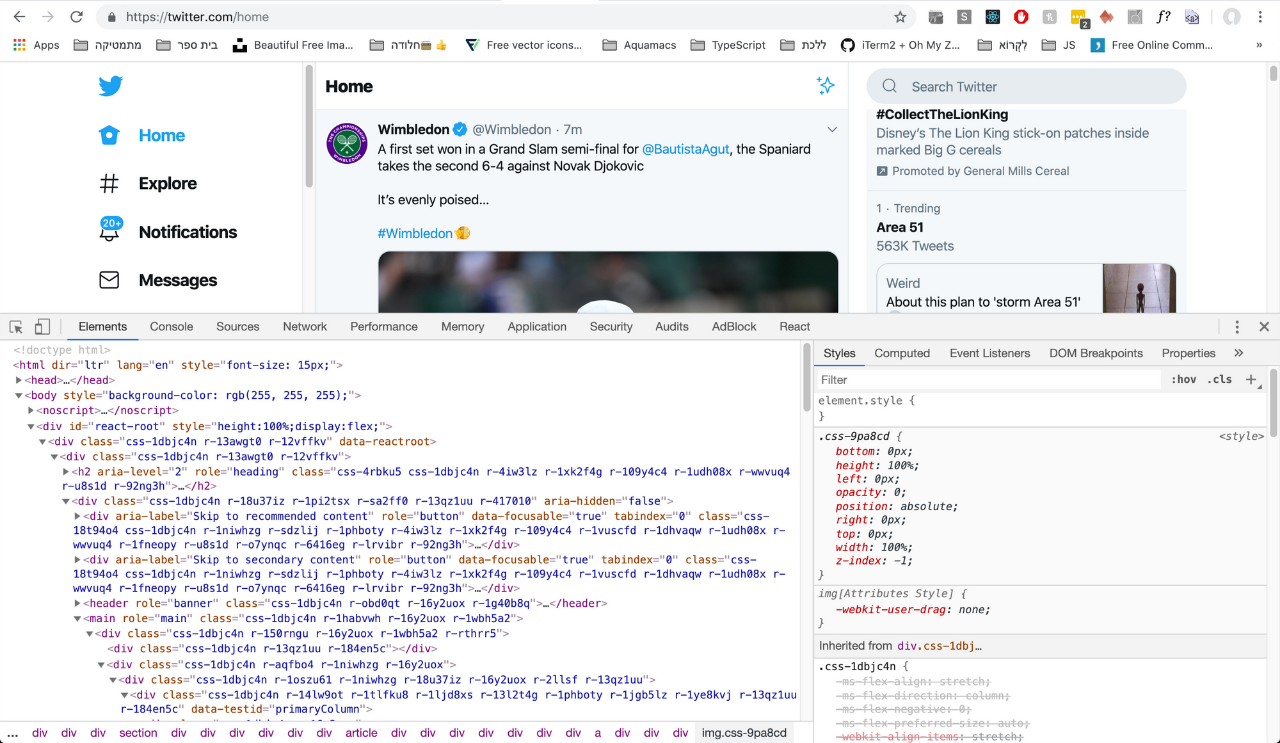

Ajax, short for Asynchronous JavaScript and XML, is a set of web development techniques that allows a web page to update portions of its content without having to refresh the page. In fact, you don’t need to know much about Ajax to extract data. All you need is to figure out whether the site you want to scrape uses Ajax or not.

We will also be using the terminal to execute the commands that will run the script. In order to open the terminal in Visual Studio Code you go to the top menu again and go Terminal > New Terminal. The terminal will open at the bottom looking something like (but probably not exactly like) this:

It is important that the terminal is opened to the actual location of the code or it won’t be able to find the scripts when we try to run them. In your side navbar in Visual Studio Code, without any folders expanded, you should see a > src folder. If you don’t see it, you are probably at the wrong location and you need to re-open the folder at the correct location.

After you have the package downloaded and you are at the terminal, your first command will be npm install. This will download all of the necessary libraries required for this project.

Web scraping with axios

It’s web scrapin’ time! Axios is an extremely simple package that allows us to call to other web pages from our location. In our previous two posts in the series we used a sampleHtml file from which we would parse the data. In this post, we use sample html no longer!

So…after doing all the setup steps above, we just type…

And we’re done. Post over.

Didn’t believe that it was really over? Okay, fine, you’re right. There is more that I want to talk about but essentially that is it. Get the axiosResponse and pull the data from it and you’ve got your html that you want to parse with Cheeriojs.
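As a rough sketch of the step described above (not the demo repository's exact code), the request boils down to calling axios and reading the data property of the response. The fetchHtml name and the optional httpClient parameter are assumptions made for illustration; the parameter only exists so the function can be tried with a stub instead of a live request.

```javascript
// Fetch a page and return its HTML as a string.
// `httpClient` is a hypothetical parameter: it defaults to axios, which is
// only loaded when no other client is passed in.
async function fetchHtml(url, httpClient = require('axios')) {
  const axiosResponse = await httpClient.get(url);
  // axios puts the response body on the `data` property.
  return axiosResponse.data;
}
```

The returned string is what you would then hand to Cheeriojs for parsing, as in the earlier posts.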

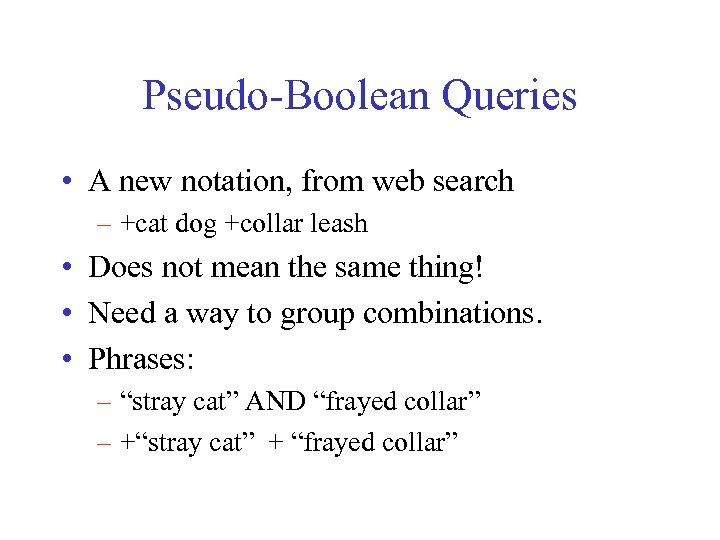

Javascript promises

I don’t want to go into this in depth, but a large tenet of Axios is that it is promise based. Nodejs by default is asynchronous code. That means that it doesn’t wait for the code before it to finish before it starts the next bit of code. Because web requests can take varying times to complete (if a web page loads slowly, for example) we need to do something to make sure our web request is complete before we try to parse the html…that wouldn’t be there yet.

Enter promises. The promise is given (web request starts) and when using the right keywords (async/await in this case) the code pauses there until the promise is fulfilled (web request completes). This is very high level and if you want to learn more about promises, google it. There are a ton of guides about it.

The last part about promises is the keyword part. There are other ways to signal to Nodejs that we are awaiting a promise completion but the one I want to talk quickly about here is async/await.

Our code this time is surrounded in an async block. It looks like this:

This tells node that within this block there will be asynchronous code and it should be prepared to handle promises that will block that code. Important! At the very end of the block it’s important to notice the (). That makes this function call itself. If you don’t have that there, you’ll run your script and nothing will happen.
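Here is a minimal sketch of that pattern. The wait helper and the finished variable are made up for this example; wait simply stands in for any slow, promise-returning task such as a web request.

```javascript
// A stand-in for any slow, promise-returning task such as a web request.
function wait(ms, value) {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// The trailing () calls the function right away; without it nothing runs.
const finished = (async () => {
  // Execution inside the block pauses here until the promise is fulfilled.
  const result = await wait(10, 'request complete');
  console.log(result);
  return result;
})();
```

Capturing the returned promise in a variable is optional; most scripts just end with the closing })(); and let the block run.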

Once we have a block, then inside that we can use awaits liberally. Like this:

Now. You can just enter in any url you are looking for within that axios.get('some url here') and you’re good to go! Doing web scraping and stuff!

Looking for business leads?

Using the techniques talked about here at javascriptwebscrapingguy.com, we’ve been able to launch a way to access awesome web data. Learn more at Cobalt Intelligence!

Introduction

The process of collecting information from a website (or websites) is often referred to as either web scraping or web crawling. Web scraping is the process of scanning a webpage/website and extracting information out of it, whereas web crawling is the process of iteratively finding and fetching web links starting from a URL or list of URLs. While there are differences between the two, you might have heard the two words used interchangeably. Although this article will be a guide on how to scrape information, the lessons learned here can very easily be used for the purposes of 'crawling'.

Hopefully I don't need to spend much time talking about why we would look to scrape data from an online resource, but quite simply, if there is data you want to collect from an online resource, scraping is how we would go about it. And if you would prefer to avoid the rigour of going through each page of a website manually, we now have tools that can automate the process.

I'll also take a moment to add that the process of web scraping is a legal grey area. You will be staying on the legal side if you are collecting data for personal use and it is data that is otherwise freely available. Scraping data that is not otherwise freely available is where things enter murky water. Many websites will also have policies relating to how data can be used, so please bear those policies in mind. With all of that out of the way, let's get into it.

For the purposes of demonstration, I will be scraping my own website and will be downloading a copy of the scraped data. In doing so, we will:

- Set up an environment that allows us to watch the automation if we choose to (the alternative is to run this in what is known as a 'headless' browser - more on that later);

- Automate the visit to my website;

- Traverse the DOM;

- Collect pieces of data;

- Download pieces of data;

- Learn how to handle asynchronous requests;

- And my favourite bit: end up with a complete project that we can reuse whenever we want to scrape data.

Now in order to do all of these, we will be making use of two things: Node.js and Puppeteer. Chances are you have already heard of Node.js, so we won't go into what that is, but just know that we will be using one Node.js module: FS (File System).

Let's briefly explain what Puppeteer is.

Puppeteer

Puppeteer is a Node library which provides a high-level API to control Chrome or Chromium over the DevTools Protocol. Most things that you can do manually in the browser can be done using Puppeteer. The Puppeteer website provides a bunch of examples, such as taking screenshots and generating PDFs of webpages, automating form submission, testing UI, and so on. One thing they don't expressly mention is the concept of data scraping, likely due to the potential legal issues mentioned earlier. But as it states, anything you can do manually in a browser can be done with Puppeteer. Automating those things means that you can do it way, way faster than any human ever could.

This is going to be your new favourite website: https://pptr.dev/ Once you're finished with this article, I'd recommend bookmarking this link as you will want to refer to their API if you plan to do any super advanced things.

Installation

If you don't already have Node installed, go to https://nodejs.org/en/download/ and install the relevant version for your computer. That will also install something called npm, which is a package manager and allows us to install third party packages (such as Puppeteer). We will then go and create a directory and create a package.json by typing npm init inside of the directory. Note: I actually use yarn instead of npm, so feel free to use yarn if that's what you prefer. From here on, we are going to assume that you have a basic understanding of package managers such as npm/yarn and have an understanding of Node environments. Next, go ahead and install Puppeteer by running npm i puppeteer or yarn add puppeteer.

Directory Structure

Okay, so after running npm init/yarn init and installing puppeteer, we currently have a directory made up of a node_modules folder, a package.json and a package-lock.json. Now we want to try and create our app with some separation of concerns in mind. So to begin with, we'll create a file in the root of our directory called main.js. main.js will be the file that we execute whenever we want to run our app. In our root, we will then create a folder called api. This api folder will include most of the code our application will be using. Inside of this api folder we will create three files: interface.js, system.js, and utils.js. interface.js will contain any puppeteer-specific code (so things such as opening the browser, navigating to a page etc), system.js will include any node-specific code (such as saving data to disk, opening files etc), and utils.js will include any reusable bits of JavaScript code that we might create along the way.

Note: In the end, we didn't make use of utils.js in this tutorial, so feel free to remove it if you don't think your own project will make use of it.

Basic Commands

Okay, now because a lot of the code we will be writing depends on network requests, waiting for responses etc, we tend to write a lot of puppeteer code asynchronously. Because of this, it is common practice to wrap all of your executing code inside of an async IIFE. If you're unsure what an IIFE is, it's basically a function that executes immediately after its creation. For more info, here's an article I wrote about IIFEs. To make our IIFE asynchronous, we just add the async keyword to the beginning of it like so:

Right, so we've set up our async IIFE, but so far we have nothing to run in there. Let's fix that by enabling our ability to open a browser with Puppeteer. Let's open api/interface.js and begin by creating an object called interface. We will also want to export this object. Therefore, our initial boilerplate code inside of api/interface.js will look like this:

As we are going to be using Puppeteer, we'll need to import it. Therefore, we'll require() it at the top of our file by writing const puppeteer = require('puppeteer'); Inside of our interface object, we will create a function called async init(). As mentioned earlier, a lot of our code is going to be asynchronous. Now because we want to open a browser, that may take a few seconds. We will also want to save some information into variables off the back of this. Therefore, we'll need to make this asynchronous so that our variables get the responses assigned to them. There are two pieces of data that will come from our init() function that we are going to want to store into variables inside of our interface object. Because of this, let's go ahead and create two key:value pairings inside of our interface object, like so:

Now that we have those set up, let's write a try/catch block inside of our init() function. For the catch part, we'll simply console.log out our error. If you'd like to handle this another way, by all means go ahead - the important bits here are what we will be putting inside of the try part. We will first set this.browser to await puppeteer.launch(). As you may expect, this simply launches a browser. The launch() function can accept an optional object where you can pass in many different options. We will leave it as is for the moment but we will return to this in a little while. Next we will set this.page to await this.browser.newPage(). As you may imagine, this will open a tab in the puppeteer browser. So far, this gives us the following code:

We're also going to add two more functions into our interface object. The first is a visitPage() function which we will use to navigate to certain pages. You will see below that it accepts a url param which will basically be the full URL that we want to visit. The second is a close() function which will basically kill the browser session. These two functions look like this:

Now before we try to run any code, let's add some arguments into the puppeteer.launch() function that sits inside of our init() function. As mentioned before, launch() accepts an object as its argument. So let's write the following: puppeteer.launch({headless: false}). This will mean that when we do try to run our code, a browser will open and we will be able to see what is happening. This is great for debugging purposes as it allows us to see what is going on in front of our very eyes. As an aside, the default option here is headless: true and I would strongly advise that you keep this option set to true if you plan to run anything in production, as your code will use less memory and will run faster - some environments, such as a cloud function, will also have to be headless. Anyway, this gives us this.browser = await puppeteer.launch({headless: false}). There's also an args: [] key which takes an array as its value. Here we can add certain things such as use of proxy IPs, incognito mode etc. Finally, there's a slowMo key that we can pass in to our object which we can use to slow down the speed of our Puppeteer interactions. There are many other options available but these are the ones that I wanted to introduce to you so far. So this is what our init() function looks like for now (use of incognito and slowMo have been commented out but left in to provide a visual aid):

There's one other line of code we are going to add, which is await this.page.setViewport({ width: 1279, height: 768 });. This isn't necessary, but I wanted to put the option of being able to set the viewport so that when you view what is going on the browser width and height will seem a bit more normal. Feel free to adjust the width and height to be whatever you want them to be (I've set mine based on the screen size for a 13-inch MacBook Pro). You'll notice in the code block below that this setViewport function sits below the this.page assignment. This is important because you have to set this.page before you can set its viewport.

So now if we put everything together, this is how our interface.js file looks:
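What follows is a sketch of how those pieces could fit together rather than the exact file from the project. One deliberate difference: instead of calling require('puppeteer') at the top of the file, this version wraps the object in a hypothetical createInterface(puppeteer) factory, so it can be tried with a stub when no browser is available. The '--incognito' flag and the slowMo value of 250 are illustrative guesses for the commented-out options.

```javascript
// Build the interface object around a Puppeteer module.
// In a real project: const scraper = createInterface(require('puppeteer'));
function createInterface(puppeteer) {
  return {
    browser: null,
    page: null,

    async init() {
      try {
        this.browser = await puppeteer.launch({
          headless: false, // show the browser window while debugging
          // args: ['--incognito'], // assumed flag, left commented out
          // slowMo: 250, // assumed delay in milliseconds, left commented out
        });
        this.page = await this.browser.newPage();
        // this.page must exist before its viewport can be set.
        await this.page.setViewport({ width: 1279, height: 768 });
      } catch (err) {
        console.log(err);
      }
    },

    // Navigate the open tab to the full URL passed in.
    async visitPage(url) {
      await this.page.goto(url);
    },

    // Kill the browser session.
    async close() {
      await this.browser.close();
    },
  };
}
```

If you prefer the layout described in the text, drop the factory, require puppeteer at the top of the file, and export the object with module.exports.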

Now, let's move back to our main.js file in the root of our directory and put to use some of the code we have just written. Add the following code so that your main.js file now looks like this:

Now go to your command line, navigate to the directory for your project and type node main.js. Providing everything has worked okay, your application will proceed to load up a browser and navigate to sunilsandhu.com (or any other website if you happened to put something else in). Pretty neat! Now during the process of writing this piece, I actually encountered an error while trying to execute this code. The error said something along the lines of Error: Could not find browser revision 782078. Run 'PUPPETEER_PRODUCT=firefox npm install' or 'PUPPETEER_PRODUCT=firefox yarn install' to download a supported Firefox browser binary. This seemed quite strange to me as I was not trying to use Firefox and had not encountered this issue when using the same code for a previous project. It turns out that when installing puppeteer, it hadn't downloaded a local version of Chrome to use from within the node_modules folder. I'm not entirely sure what caused this issue (it may have been because I was hotspotting off of my phone at the time), but managed to fix the issue by simply copying over the missing files from another project I had that was using the same version of Puppeteer. If you encounter a similar issue, please let me know and I'd be curious to hear more.

Advanced Commands

Okay, so we've managed to navigate to a page, but how do we gather data from the page? This bit may look a bit confusing, so be ready to pay attention! We're going to create two functions here, one that mimics document.querySelectorAll and another that mimics document.querySelector. The difference here is that our functions will return whatever attribute/attributes from the selector you were looking for. Both functions actually use querySelector/querySelectorAll under the hood and if you have used them before, you might wonder why I am asking you to pay attention. The reason here is because the retrieval of attributes from them is not the same as it is when you're traversing the DOM in a browser. Before we talk about how the code works, let's take a look at what our final function looks like:

So, we're writing another async function and we'll wrap the contents inside of a try/catch block. To begin with, we will await and return the value from an $$eval function which we have available for execution on our this.page value. Therefore, we're running return await this.page.$$eval(). $$eval is just a wrapper around document.querySelectorAll.

There's also an $eval function available (note that this one only has 1 dollar sign), which is the equivalent for using document.querySelector.

The $eval and $$eval functions accept two parameters. The first is the selector we want to run it against. So for example, if I want to find div elements, the selector would be 'div'. The second is a function which retrieves specific attributes from the result of the query selection. You will see that we are passing in two parameters to this function: the first, elements, is basically just the entire result from the previous query selection. The second is an optional value that we have decided to pass in, this being attribute.

We then map over our query selection and find the specific attribute that we passed in as the parameter. You'll also notice that after the curly brace, we pass in the attribute again, which is necessary because when we use $$eval and $eval, it executes them in a different environment (the browser) to where the initial code was executed (in Node). When this happens, it loses context. However, we can fix this by passing it in at the end. This is simply a quirk specific to Puppeteer that we have to account for.

With regard to our function that simply returns one attribute, the difference between the code is that we simply return the attribute value rather than mapping over an array of values. Okay, so we are now in a position where we are able to query elements and retrieve values. This puts us in a great position to now be able to collect data.
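To make the description above concrete, here is a sketch of the two helpers. In the article they live on the interface object and use this.page; here they are standalone functions that take a Puppeteer page as their first argument, which is an assumption made so the example is self-contained. The name querySelectorAttribute for the single-element version, and reading the value as a property with element[attribute], are also assumptions.

```javascript
// Return the given attribute from every element matching the selector.
async function querySelectorAllAttributes(page, selector, attribute) {
  try {
    return await page.$$eval(
      selector,
      // This callback runs in the browser, not in Node, so `attribute`
      // has to be handed over as an extra argument (see the last line).
      (elements, attribute) => elements.map((element) => element[attribute]),
      attribute
    );
  } catch (error) {
    console.log(error);
  }
}

// Return the given attribute from the first element matching the selector.
async function querySelectorAttribute(page, selector, attribute) {
  try {
    return await page.$eval(
      selector,
      (element, attribute) => element[attribute],
      attribute
    );
  } catch (error) {
    console.log(error);
  }
}
```

Both helpers swallow errors after logging them, so a failed query resolves to undefined rather than throwing; adjust that if you would rather the caller handle failures.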

So let's go back into our main.js file. I've decided that I would like to collect all of the links from my website. Therefore, I'll use the querySelectorAllAttributes function and will pass in two parameters: 'a' for the selector in order to get all of the <a> tags, then 'href' for the attribute in order to get the link from each <a> tag. Let's see how that code looks:

Let's run node main.js again. If you already have it running from before, type cmd+c/ctrl+c and hit enter to kill the previous session. In the console you should be able to see a list of links retrieved from the website. Tip: What if you wanted to then go and visit each link? Well you could simply write a loop function that takes each value and passes it in to our visitPage function. It might look something like this:
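One possible shape for that loop is sketched here. The visitEach name and its scraper parameter are made up for this example; scraper is any object with an async visitPage(url) function, such as the interface object built earlier.

```javascript
// Visit each link one at a time, waiting for every navigation to finish
// before starting the next one.
async function visitEach(scraper, links) {
  const visited = [];
  for (const link of links) {
    await scraper.visitPage(link);
    visited.push(link);
  }
  return visited;
}
```

A for...of loop is used rather than forEach because await inside a forEach callback does not pause the loop itself, so all of the navigations would be fired at the same tab at once.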

Saving data

Great, so we are able to visit pages and collect data. Let's take a look at how we can save this data. Note: There are, of course, many options here when it comes to saving data, such as saving to a database. We are, however, going to look at how we would use Node.js to save data locally to our hard drive. If this isn't of interest to you, you can probably skip this section and swap it out for whatever approach you'd prefer to take.

Let's switch gears and go into our empty system.js file. We're just going to create one function. This function will take three parameters, but we are going to make two of them optional. Let's take a look at what our system.js file looks like, then we will review the code:

So the first thing you will notice is that we are requiring an fs module at the top. This is a Node.js-specific module that is available to you as long as you have Node installed on your device. We then have our system object and we are exporting it at the bottom; this is the same process we followed for the interface.js file earlier.
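Since the original listing is not shown here, the following is only a guess at what such a file could look like. The saveFile name, its parameter names, the default values and the choice to write JSON are all assumptions; the only things taken from the text are the fs require at the top, a system object with one function taking one required and two optional parameters, and the export at the bottom.

```javascript
const fs = require('fs');
const path = require('path');

const system = {
  // `data` is required; `filePath` and `fileName` are optional.
  // All three names and both defaults are hypothetical.
  saveFile(data, filePath = process.cwd(), fileName = 'scraped-data.json') {
    // Create the target folder if it does not exist yet.
    fs.mkdirSync(filePath, { recursive: true });
    const fullPath = path.join(filePath, fileName);
    fs.writeFileSync(fullPath, JSON.stringify(data, null, 2));
    return fullPath;
  },
};

module.exports = system;
```

With something like this in place, main.js could pass the array of collected links straight to system.saveFile(links) and find the result in the project folder.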

Conclusion

And there we have it! We have created a new project from scratch that allows you to automate the collection of data from a website. We have gone through each of the steps involved, from initial installation of packages, right up to downloading and saving collected data. You now have a project that allows you to input any website and collect and download all of the links from it.

Hopefully the methods we have outlined provide you with enough knowledge to be able to adapt the code accordingly (eg, if you want to gather a different HTML tag besides <a> tags).

What will you be using this newfound information for? I'd love to hear, so be sure to reach out to me over Twitter to let me know :)

GitHub

For anyone who is interested in checking out the code used in this article, I have put together a small package called Scrawly that can be found on GitHub. Here's the link: https://github.com/sunil-sandhu/scrawly